Authors: Kalyn Stricklin, Alex Almanza

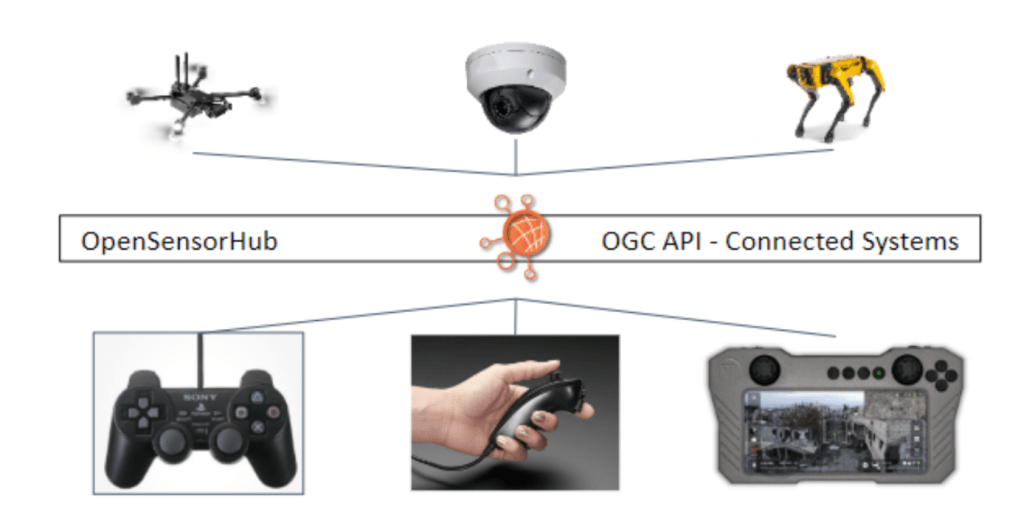

OpenSensorHub (OSH) has long been recognized for its ability to integrate and control a wide array of devices through a unified interface. From robots to surveillance cameras to drones, OSH provides web-accessible services that simplify monitoring and tasking these sensors. Given the diverse range of controllers on the market, we explored integrating a Universal Controller driver capable of managing many different input devices seamlessly.

The Universal Controller handles multiple Human Interface Device (HID) compliant gamepads as well as Nintendo Wii controllers with Nunchuk extensions. We have successfully tested it with a variety of controllers, including the Xbox 360, Xbox One, PS2, PS3, Nintendo Switch Joy-Cons, Nintendo Switch wired controllers, and WiiMotes with or without Nunchuks. The driver lets any connected controller act as the ‘primary controller’, and hotkeys can be customized for easy switching between controllers and control streams.

Core Features of the Universal Controller Driver:

- Multiple Controller Support: The driver supports various gamepads and Wii controllers, ensuring broad compatibility across popular controller brands.

- Dynamic Switching: Users can switch the primary control role among connected controllers.

- Customizable Control: Hotkeys and combinations can be configured for swift transitions between control streams and controllers.

The main advantage of the Universal Controller driver is its adaptability to each client. In the configuration panel, the client chooses between two types of controllers: generic gamepads and WiiMotes. This choice establishes the connection between the OSH platform and the controller. The client can then add preset configurations to each controller by selecting a gamepad component and then an action.

In the Controller Cycling Action list, you can choose a button, map it to an action, and assign that entry to a controller index. This lets each controller be adapted to a user’s specific needs and usage, improving the overall flexibility and efficiency of the system. The Universal Controller provides an intuitive, responsive experience that lets users tailor the control streams to their operational requirements.
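To make the cycling idea concrete, here is a minimal Java sketch of how a list of cycling presets might decide which controller is primary. It is not the driver's actual code: the `CyclingPreset` record, the `PrimaryControllerSelector` class, the button names, and the reading of "controller index" as the controller whose buttons trigger the preset are all assumptions for illustration.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Set;

// Hypothetical actions a cycling preset can trigger.
enum CycleAction { NEXT_CONTROLLER, PREVIOUS_CONTROLLER }

// Hypothetical preset: when every button in `buttons` is held on the
// controller at `controllerIndex`, perform `action`.
record CyclingPreset(int controllerIndex, Set<String> buttons, CycleAction action) {}

final class PrimaryControllerSelector {
    private final int controllerCount;
    private final List<CyclingPreset> presets = new ArrayList<>();
    private int primary = 0; // index of the controller currently in control

    PrimaryControllerSelector(int controllerCount) {
        if (controllerCount < 1) {
            throw new IllegalArgumentException("need at least one controller");
        }
        this.controllerCount = controllerCount;
    }

    void addPreset(CyclingPreset preset) {
        presets.add(preset);
    }

    int primary() {
        return primary;
    }

    // Called with the set of buttons currently held on controller `index`.
    // For brevity this fires on every call while a combination is held;
    // a real driver would likely act only when the combination is first pressed.
    void onButtons(int index, Set<String> pressed) {
        for (CyclingPreset p : presets) {
            if (p.controllerIndex() == index && pressed.containsAll(p.buttons())) {
                int step = (p.action() == CycleAction.NEXT_CONTROLLER) ? 1 : -1;
                // floorMod wraps in both directions (e.g. 0 - 1 becomes the last index).
                primary = Math.floorMod(primary + step, controllerCount);
                return; // apply at most one preset per input event
            }
        }
    }
}
```

Requiring the whole button set via `containsAll` is what makes multi-button hotkeys possible: holding only part of a combination does nothing.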

SensorML process chains enable seamless device management and control across numerous sensor systems. Here is how it works:

- Using the Universal Controller, we connect HID devices and capture user inputs such as button presses and joystick movements. These controllers are the starting point of the process chain.

- Once the Universal Controller captures input from the device, it sends the data through the process chain, which connects it to another sensor, such as a PTZ camera. The process chain interprets the controller input and translates it into specific camera movements: panning left or right, tilting up or down, and zooming in or out.

- With process chains and the Universal Controller, we can switch seamlessly between devices and sensors. In the controller configuration, we can add a preset that switches from controlling the PTZ camera to driving a robot to flying a drone, all with a single button press on the gamepad.
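The PTZ step of the process chain described above can be sketched as a pure function from stick and button state to pan, tilt, and zoom rates. This is a hypothetical illustration, not the OSH process implementation: the `GamepadToPtz` and `PtzCommand` names, the dead-zone rescaling, the axis sign conventions, and the choice of rates rather than absolute positions are all assumptions.

```java
// Hypothetical command produced by the mapping step; units are camera-specific.
record PtzCommand(double panRate, double tiltRate, double zoomRate) {}

final class GamepadToPtz {
    private final double deadZone; // |axis| below this is treated as centered
    private final double maxRate;  // rate sent at full stick deflection

    GamepadToPtz(double deadZone, double maxRate) {
        if (deadZone < 0.0 || deadZone >= 1.0) {
            throw new IllegalArgumentException("deadZone must be in [0, 1)");
        }
        this.deadZone = deadZone;
        this.maxRate = maxRate;
    }

    // Clamps an axis to [-1, 1], zeroes it inside the dead zone, and rescales the
    // rest so the output ramps smoothly from 0 at the dead-zone edge to +/-1.
    private double shape(double axis) {
        double a = Math.max(-1.0, Math.min(1.0, axis));
        double mag = Math.abs(a);
        if (mag < deadZone) {
            return 0.0;
        }
        return Math.signum(a) * (mag - deadZone) / (1.0 - deadZone);
    }

    // x, y: stick axes in [-1, 1], assumed right-positive and up-positive.
    // zoomIn, zoomOut: button states; pressing both cancels out.
    PtzCommand map(double x, double y, boolean zoomIn, boolean zoomOut) {
        double zoom = (zoomIn ? 1.0 : 0.0) - (zoomOut ? 1.0 : 0.0);
        return new PtzCommand(shape(x) * maxRate, shape(y) * maxRate, zoom * maxRate);
    }
}
```

The dead zone keeps a slightly off-center stick from making the camera drift, and rescaling from the dead-zone edge avoids a jump in speed when the stick leaves it. Many gamepads report "up" as a negative Y value, so the tilt sign may need flipping for a given device.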

Together, SensorML process chains and the Universal Controller create a highly flexible, responsive system that allows a single controller to manage and task multiple types of devices.
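Switching one gamepad between a PTZ camera, a robot, and a drone can be modeled as cycling through a list of control targets on a button press. The sketch below is hypothetical (`ControlTarget` and `TargetSwitcher` are illustrative names, not OSH classes); it advances only on the released-to-pressed transition, so holding the button does not keep cycling.

```java
import java.util.List;

// Hypothetical set of devices a single gamepad can task.
enum ControlTarget { PTZ_CAMERA, ROBOT, DRONE }

final class TargetSwitcher {
    private final List<ControlTarget> order;
    private int current = 0;
    private boolean wasPressed = false; // previous state of the switch button

    TargetSwitcher(List<ControlTarget> order) {
        if (order.isEmpty()) {
            throw new IllegalArgumentException("no control targets");
        }
        this.order = List.copyOf(order);
    }

    ControlTarget current() {
        return order.get(current);
    }

    // Feed the switch button state on every input update; advances to the
    // next target (wrapping around) once per press.
    ControlTarget update(boolean switchPressed) {
        if (switchPressed && !wasPressed) {
            current = (current + 1) % order.size();
        }
        wasPressed = switchPressed;
        return current();
    }
}
```

In a process chain, the returned target would decide which downstream control stream receives the mapped joystick commands.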

Android Compatibility with OSH-Android

Using OSH-Android and a simple Java driver that maps Android-attachable game controllers, we can integrate OSH, Android, physical controls, and an OSH-based visualization client. This means the controller and the client can live on the same system, all running OpenSensorHub! Does this seem familiar?

The easy-to-use intelligent systems built with OpenSensorHub let us emulate commercial systems such as the Tomahawk Ecosystem, entirely in the open-source space. The great thing about OpenSensorHub and OGC API – Connected Systems is that this OSH ecosystem is open-ended and interoperable by design. Connected Systems allows this control-based ecosystem to interact with other OSH nodes and ecosystems around the globe, enabling large-scale analytics, AI/ML, enhanced data processing, and much more.

Version 1.3.2 of OSH has been released with many bug fixes and enhancements to the OGC service interfaces and security. This version is available for download as a single zip archive from