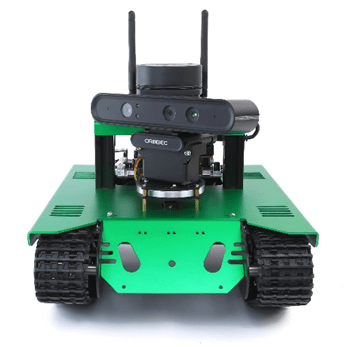

In the past, we wrote a brief article on OpenSensorHub (OSH) integration with robotics platforms. For it, we used an inexpensive STEM platform (the “Yahboom G1 AI vision smart tank robot kit with WiFi video camera for Raspberry Pi 4B”), stripped its software, and wrote a completely OSH-centric, web-accessible services solution for monitoring and tasking the robot. The entire software stack was hosted on the on-board Raspberry Pi. However, the solution lacked location-enabled and geographically aware capabilities, simply because we had not equipped the robot with its own GPS unit. We also explored running OSH on Nvidia Jetson boards, using their GPUs to speed up the processing needed to augment sensor observations with SensorML process chains that apply artificial intelligence, machine learning, and computer vision.

The ideal solution would combine the capabilities of the Robot Operating System (ROS) with OSH to create a truly location-enabled, geographically aware, web-accessible robotics platform powered by an Nvidia Jetson single-board computer (SBC). To this end, we acquired another STEM robotics platform, more expensive but not prohibitively so: the Yahboom Transbot ROS package. This system includes a SLAMTEC RP-Lidar A1 (2-dimensional), an Orbbec Astra Pro (RGB and depth camera), a wireless PS2-like controller, and an Nvidia Jetson Nano with a hardware interface board. The package includes prebuilt ROS 1 packages on Ubuntu 18.04 Linux and an optional downloadable smartphone app. To complete the solution, we added a compatible GPS module that connects to the SBC via USB.

Read More “On Robotics Applications as Connected Systems with OpenSensorHub and ROS”